Real-time Analytics: Airflow + Kafka + Druid + Superset

Real-time analytics has become a necessity for large companies around the world. Analyzing your data as a stream lets you continuously track customer behavior and act on it. I was also looking for a real-time analytics solution and wanted to test Druid's real-time capabilities. This blog gives an introduction to setting up streaming analytics using open source technologies. All the code has been uploaded to the GitHub repo. A Vietnamese version can be read at Vie.

Airflow

In this blog, I use Airflow, a task scheduling platform that allows you to create, orchestrate, and monitor data workflows. Here it acts as a producer that sends data to a Kafka topic.

Kafka

Kafka is a distributed messaging platform that allows you to sequentially log streaming data into topic-specific feeds, which other applications in turn can tap into. In this setup, Kafka is used to collect and buffer the events, which are then ingested by Druid.

Druid

Apache Druid provides low-latency real-time data ingestion from Kafka, flexible data exploration, and rapid data aggregation. Druid is not considered a data lake but rather a data river. As data is generated by users, sensors, or other sources, it becomes available to the foreground application. With a Hive/Presto setup, data typically becomes available hourly or daily, but with Druid, data is available to query as soon as it reaches the database. Druid claims a 90%-98% speed improvement over Apache Hive (untested).

Superset

Apache Superset is an open source data visualization tool that can be used to represent data graphically. Superset was originally created by Airbnb and later released to the Apache community. It is developed in Python and uses the Flask framework for all web interactions. Superset supports the majority of RDBMSs through SQLAlchemy.

How does it work?

Let’s set up an example of a real-time coin price analysis system based on Airflow-Kafka-Druid-Superset. Using Docker, it’s easy to set up a local instance to try out and explore your ideas.

- To set up the system, start by cloning the Git repo.

# Clone the repo from GitHub

git clone https://github.com/apot-group/real-time-analytic.git

# Go to the root folder

cd real-time-analytic

- Next, build the local images.

# Build the images and start the services with docker-compose

docker-compose rm -f && docker-compose build && docker-compose up

- Service information:

| Server Name   | Host                    | Username/Password                           |
|---------------|-------------------------|---------------------------------------------|
| Druid Unified | http://localhost:8888/  | None                                        |
| Druid Legacy  | http://localhost:8081/  | None                                        |
| Superset      | http://localhost:8088/  | docker exec -it superset bash superset-init |
| Airflow       | http://localhost:3000/  | admin - app/standalone_admin_password.txt   |

- Note that the Airflow user is admin, and the password is generated automatically at /app/standalone_admin_password.txt in the a-airflow directory once the server is running. For Superset, you need to run the following command against the running container to create the user:

$ docker exec -it superset superset-init

Username [admin]: admin

User first name [admin]: Admin

User last name [user]: User

Email [admin@fab.org]: admin@superset.com

Password:

Repeat for confirmation:

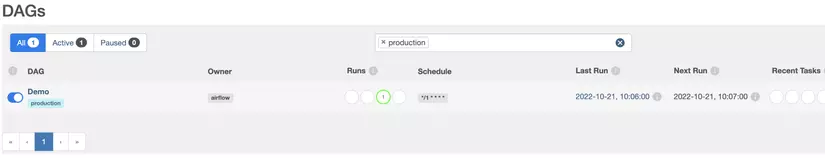

- The Airflow DAG app_airflow/app/dags/demo.py is configured to run once a minute, sending messages to the Kafka demo topic with price data for the coins ['BTC', 'ETH', 'BTT', 'DOT']. The structure of the message data is shown below; I just randomize the price for simplicity. To start streaming, sign in to Airflow and enable the demo DAG.

{

"data_id": 454,

"name": "BTC",

"timestamp": "2021-02-05T10:10:01"

}

- Note: you can also run producer.py with Python as an alternative to the Airflow demo DAG.

$ python3 producer.py

Producing message @ 2022-10-22 12:29:20.479806 | Message = {'data_id': 100, 'name': 'BTC', 'timestamp': 1666391360}

Producing message @ 2022-10-22 12:29:20.482750 | Message = {'data_id': 23, 'name': 'ETH', 'timestamp': 1666391360}

Producing message @ 2022-10-22 12:29:20.482898 | Message = {'data_id': 32, 'name': 'BTT', 'timestamp': 1666391360}

Producing message @ 2022-10-22 12:29:20.482991 | Message = {'data_id': 158, 'name': 'DOT', 'timestamp': 1666391360}
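The output above suggests one message per coin, each carrying a randomized price in data_id and an epoch timestamp. The following is a minimal sketch of that logic, not the repo's actual producer.py: the price range, the function names, and the `send` callable are assumptions made for illustration, and the sender is injected so the message building can be tried without a running broker.

```python
import json
import random
import time

COINS = ["BTC", "ETH", "BTT", "DOT"]  # coin list from the article


def build_messages(now=None, rng=random):
    """Return one message per coin with a randomized price in data_id."""
    ts = int(now if now is not None else time.time())
    return [
        # The 1..500 price range is an assumption, not taken from the repo.
        {"data_id": rng.randint(1, 500), "name": coin, "timestamp": ts}
        for coin in COINS
    ]


def produce(send, topic="demo"):
    """Serialize each message as UTF-8 JSON and pass it to send(topic, value)."""
    messages = build_messages()
    for message in messages:
        send(topic, json.dumps(message).encode("utf-8"))
    return messages


if __name__ == "__main__":
    # Stand-in sender that only prints what would be sent.
    produce(lambda topic, value: print(topic, value.decode("utf-8")))
```

With the kafka-python package installed, the `send` method of a `KafkaProducer` could be passed as the `send` argument instead of the printing stand-in. Which client library and broker address the repo actually uses is not stated here, so treat that as an assumption too.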

- Configure Druid to receive the stream

From the Druid console at http://localhost:8888/, select Load data > Kafka, enter the Kafka server kafka:9092 and the topic demo, then configure the output.
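The console wizard ultimately produces a Kafka supervisor spec, which can also be written by hand. Below is a rough sketch of such a spec built in Python, assuming a Druid version that supports `inputFormat`, a broker reachable as kafka:9092, and the three message fields shown earlier. The granularity settings and function names are illustrative choices, not values taken from the repo.

```python
import json
import urllib.request


def kafka_supervisor_spec(topic="demo", bootstrap="kafka:9092", ts_format="auto"):
    """Build a Kafka supervisor spec for the demo topic.

    ts_format="auto" suits ISO 8601 strings; "posix" suits epoch seconds.
    """
    return {
        "type": "kafka",
        "spec": {
            "dataSchema": {
                "dataSource": topic,
                "timestampSpec": {"column": "timestamp", "format": ts_format},
                "dimensionsSpec": {
                    "dimensions": ["name", {"type": "long", "name": "data_id"}]
                },
                "granularitySpec": {
                    "type": "uniform",
                    "segmentGranularity": "HOUR",  # assumed, tune as needed
                    "queryGranularity": "NONE",
                    "rollup": False,
                },
            },
            "ioConfig": {
                "topic": topic,
                "inputFormat": {"type": "json"},
                "consumerProperties": {"bootstrap.servers": bootstrap},
                "useEarliestOffset": True,
            },
            "tuningConfig": {"type": "kafka"},
        },
    }


def submit(spec, druid_url="http://localhost:8888"):
    """POST the spec to the supervisor endpoint and return the response body."""
    request = urllib.request.Request(
        druid_url + "/druid/indexer/v1/supervisor",
        data=json.dumps(spec).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # Only print the spec here; call submit(...) once Druid is running.
    print(json.dumps(kafka_supervisor_spec(), indent=2))
```

Since producer.py appears to emit epoch seconds while the sample message uses an ISO string, `kafka_supervisor_spec(ts_format="posix")` is probably the safer choice when feeding Druid from producer.py.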

- Set up Superset to connect to Druid as a data source

Log in to Superset at http://localhost:8088/ and create a new database via Data > Databases > + Database, connecting to Druid with the SQLAlchemy URI druid://broker:8082/druid/v2/sql/. For details, see Superset-Database-Connect.

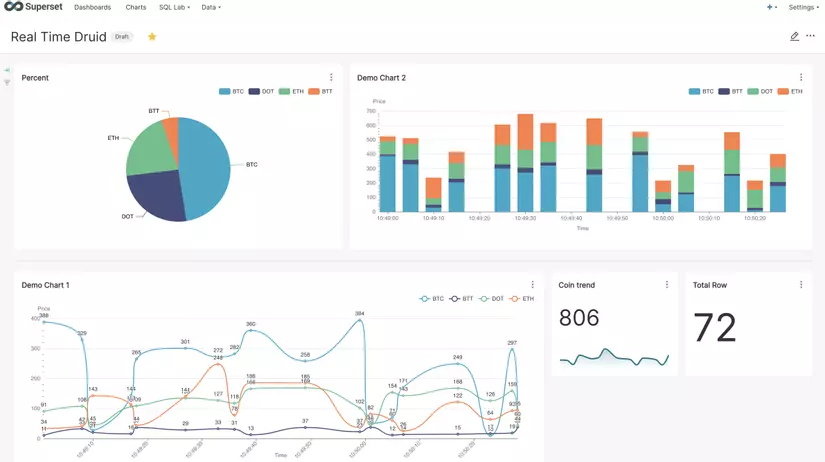

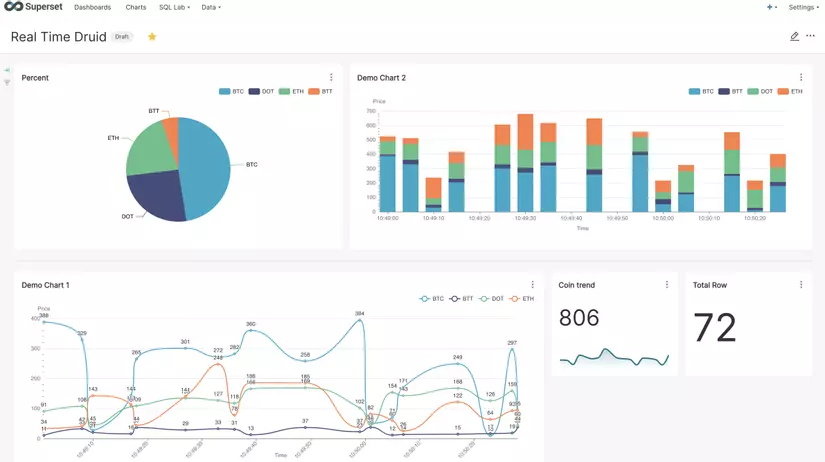

- Create dashboard

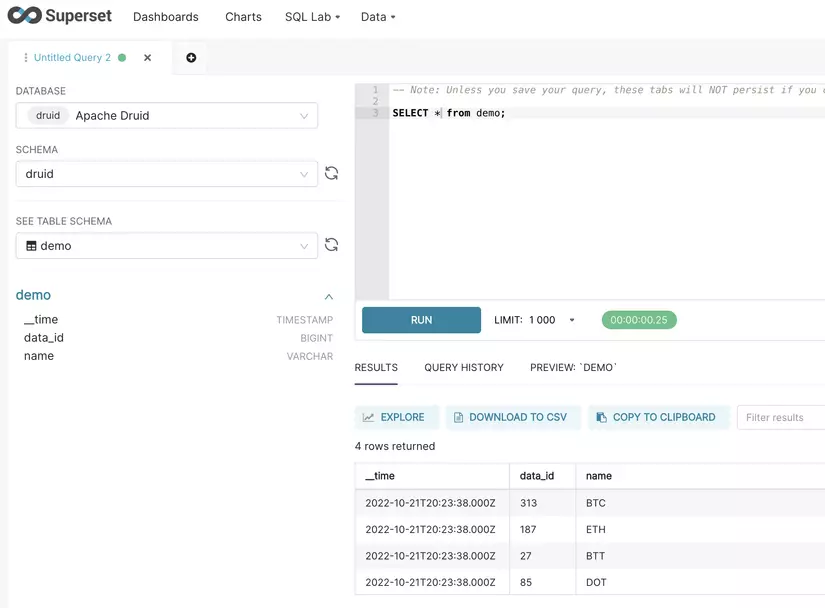

To create a dashboard with Superset, go to SQL Lab > SQL Editor, select druid as the database, druid as the schema, and demo as the table, then run the query you need. Once done, click Explore, choose your chart, and publish it to a dashboard.
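As an illustration of "the query you need", here is one possible Druid SQL query that averages the randomized price per coin over the last hour. It assumes the data source is named demo and that data_id was ingested as a numeric column. The SQL text can be pasted into SQL Lab as-is; the Python wrapper is only a hypothetical helper for checking the same query against Druid's HTTP SQL endpoint outside Superset.

```python
import json
import urllib.request

# Average randomized price per coin over the last hour (illustrative query).
QUERY = """
SELECT "name", AVG("data_id") AS avg_price, COUNT(*) AS events
FROM "demo"
WHERE __time >= CURRENT_TIMESTAMP - INTERVAL '1' HOUR
GROUP BY "name"
ORDER BY "name"
"""


def sql_request(query, druid_url="http://localhost:8888"):
    """Build a POST request for Druid's SQL endpoint."""
    return urllib.request.Request(
        druid_url + "/druid/v2/sql",
        data=json.dumps({"query": query}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def run_query(query, druid_url="http://localhost:8888"):
    """Send the query and return the rows as a list of dicts."""
    with urllib.request.urlopen(sql_request(query, druid_url)) as response:
        return json.loads(response.read().decode("utf-8"))
```

Calling `run_query(QUERY)` requires the Druid services to be up and the demo data source to contain data.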

Finally, enjoy it! 🔥 🔥